Prismos as an AI-first product

At Prismos, we often say we're working on an "AI-first architecture." It's not always obvious what that means, so here is what it comes down to.

Ever since it became clear that SaaS products could form the basis of viable businesses, most of them have essentially been built as opinionated interfaces wrapped around relational databases. These products implemented domain-specific data models (for accounting, marketing, logistics - you name it), exposed them through an API, and added a polished user interface on top. Companies with a mature data infrastructure (or a very healthy balance sheet) would typically also integrate a machine learning component into the backend stack, often in the form of classifiers acting as adjuncts to the core application logic, making decisions too complex for engineers to hand-code as rules.

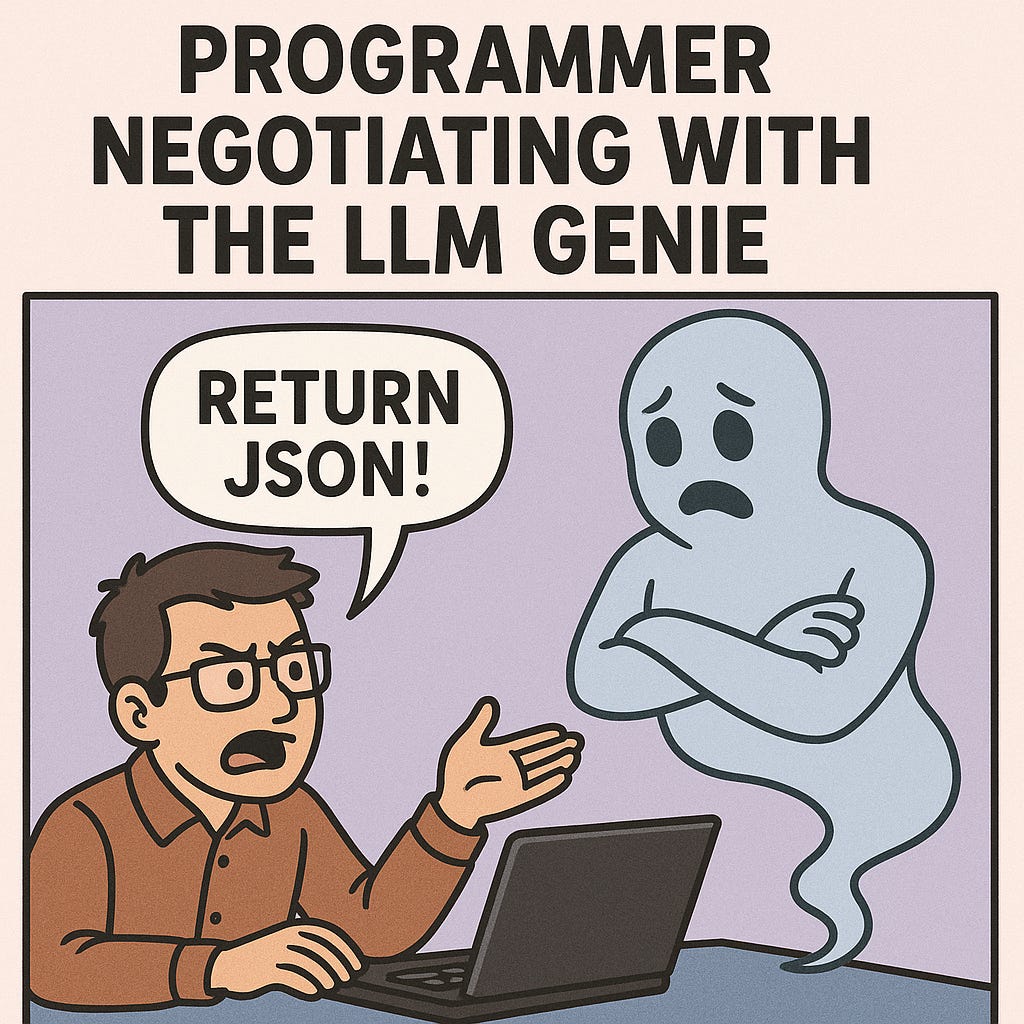

The breakthrough of the autoregressive decoders that we now call LLMs didn't change all that much about the way backend systems were built. The generation of LLMs first made available to the public in late 2022 and early 2023 amazed the world with their ability to generate free-form content, but they were terrible at structured output prediction, that is, any task where the output format actually mattered. That made it nearly impossible to integrate an LLM into your backend. Even when prompted to generate parseable data (say, JSON or XML), LLMs would often return broken or malformed structures. I've seen many people initially resort to the "try again and hope it works this time" solution, where backend code would try to parse the output in the requested format and prompt the LLM again if parsing failed. It was magical thinking with a compute bill - the kind of code that only exists because hype drowned out reason.
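For readers who never had to write it, the "try again and hope" pattern looked roughly like the sketch below. It is only an illustration: `call_llm` stands in for whatever client a team was using, and `make_flaky_model` is a made-up stub that replays canned responses so the example runs without a real model.

```python
import json
from typing import Callable, Iterable


def generate_json_with_retries(
    call_llm: Callable[[str], str],  # hypothetical: takes a prompt, returns raw text
    prompt: str,
    max_attempts: int = 3,
) -> dict:
    """Re-prompt until the output parses as a JSON object, or give up."""
    last_error = None
    for _ in range(max_attempts):
        raw = call_llm(prompt)  # every retry is another paid model call
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = exc
            continue  # malformed output: just ask again
        if isinstance(parsed, dict):
            return parsed
        last_error = ValueError("expected a JSON object")
    raise RuntimeError(
        f"no parseable output after {max_attempts} attempts"
    ) from last_error


def make_flaky_model(responses: Iterable[str]) -> Callable[[str], str]:
    """Stub model (an assumption for this sketch) that replays fixed responses."""
    remaining = iter(responses)

    def call_llm(prompt: str) -> str:
        return next(remaining)

    return call_llm


flaky = make_flaky_model([
    '{"category": "invoice"',    # truncated JSON
    "Sure! Here is the JSON:",   # chatty preamble, no JSON at all
    '{"category": "invoice"}',   # finally well-formed
])
result = generate_json_with_retries(flaky, "Classify this document. Answer in JSON.")
print(result)
```

Nothing in this loop makes the next attempt more likely to succeed than the last one. It only caps how many times you are willing to pay for the same gamble.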

The real revolution, in my eyes, came with the commercialization of constrained decoding in the spring and summer of 2023 (OpenAI announced its function-calling interface in June 2023). LLMs became capable of reliably producing well-formed output in a predetermined format. The impact of this development is hard to overstate, as it essentially closed the gap between LLMs and traditional ML - not in terms of how they work, but in where they can be used. Suddenly, you could architect software systems where decisions were delegated to a language model with a structured interface, just like you would with statistical learners.
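To make the idea concrete, here is a toy sketch of constrained decoding. It is not how any particular vendor implements it: production systems mask token logits against a grammar or JSON schema, whereas this version simply restricts a greedy decoder to a fixed list of allowed outputs. The `toy_model` scores and the `ALLOWED` strings are invented for illustration.

```python
import json
from typing import Callable, Dict, List


def constrained_decode(
    score_next: Callable[[str], Dict[str, float]],  # hypothetical: prefix -> token scores
    allowed_outputs: List[str],
) -> str:
    """Greedy decoding that can only ever produce one of `allowed_outputs`."""
    if not allowed_outputs:
        raise ValueError("allowed_outputs must not be empty")
    text = ""
    while text not in allowed_outputs:
        scores = score_next(text)
        # Mask step: keep only tokens that leave `text + token` a prefix
        # of at least one allowed output.
        valid = {
            token: score
            for token, score in scores.items()
            if token and any(out.startswith(text + token) for out in allowed_outputs)
        }
        if not valid:
            raise RuntimeError("no token can continue an allowed output")
        # Among the surviving tokens, follow the model's own preference.
        text += max(valid, key=valid.get)
    return text


def toy_model(prefix: str) -> Dict[str, float]:
    """Made-up scores: left alone, this 'model' would start chatting with 'Sure'."""
    return {"Sure": 0.9, '{"approve": ': 0.5, "true}": 0.3, "false}": 0.2, "!": 0.1}


ALLOWED = ['{"approve": true}', '{"approve": false}']
raw_decision = constrained_decode(toy_model, ALLOWED)
decision = json.loads(raw_decision)  # always parses, by construction
print(decision)
```

The model still chooses between approving and rejecting, but it can no longer choose to break the format. The caller gets a value it can consume the same way it would consume a classifier's label, with no retry loop around it.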

This is the technology that made a platform like Prismos possible, and this is why we call our product an "AI-first" product. Not because we have an AI agent (we do). It's because we treat LLMs - alongside traditional statistical classifiers - as first-class, legitimate decision-making engines in our core stack. Not an accessory. A foundation.